AI for Science Platform Division

Data Management Platform Development Unit

Unit Leader Kento Sato

kento.sato[at]riken.jp (Lab location: Kobe). Please change [at] to @.

- 2025: Unit Leader, Advanced HPC Technologies Development Unit, Next-Generation HPC Infrastructure Development Division, RIKEN R-CCS (-present)

- 2024: Unit Leader, Data Management Platform Development Unit, AI for Science Platform Division, RIKEN R-CCS (-present)

- 2018: Team Leader, High Performance Big Data Research Team, RIKEN R-CCS (-present)

- 2017: Computer Scientist, Center for Applied Scientific Computing (CASC), Lawrence Livermore National Laboratory (LLNL), USA

- 2014: Postdoctoral Researcher, Center for Applied Scientific Computing (CASC), Lawrence Livermore National Laboratory (LLNL), USA

- 2014: Postdoctoral Researcher, Global Scientific Information and Computing Center (GSIC), Tokyo Institute of Technology, Japan

- 2014: Ph.D. in Science, Department of Mathematical and Computing Sciences, Tokyo Institute of Technology, Japan

Keywords

- Big Data Processing Platform

- Deep Learning Platform

- Fault Tolerance

- Performance Evaluation/Analysis

- HPC Tools

Research summary

In the TRIP-AGIS project, R-CCS operates high-performance computers and develops systems to advance them in order to promote AI for Science research. To develop and use generative AI models for scientific research (scientific foundation models) that handle the diverse data of multimodal AI models, our research unit analyzes the performance of, and develops system software for, the supercomputer "Fugaku" and AI-specific computers equipped with GPUs. We are also developing a data management infrastructure for efficient training, inference, and utilization of scientific foundation models. Furthermore, in conjunction with the automation technology developed by TRIP-AGIS, we are conducting research and development of fundamental data technologies that enable real-time processing of enormous amounts of diverse data. This enables high-speed training and inference cycles and aims to accelerate the development and utilization of scientific foundation models. Specifically, we are conducting the following research and development:

- Optimization of data placement for training and inference using hierarchical memory/storage systems

- High-performance and scalable fault-tolerance techniques for large-scale model training and inference

- Data compression techniques to improve data communication, transfer, management, model training, and inference

- Workflow systems to streamline model training, inference, and utilization

- Other R&D to promote AI for Science research

Main research results

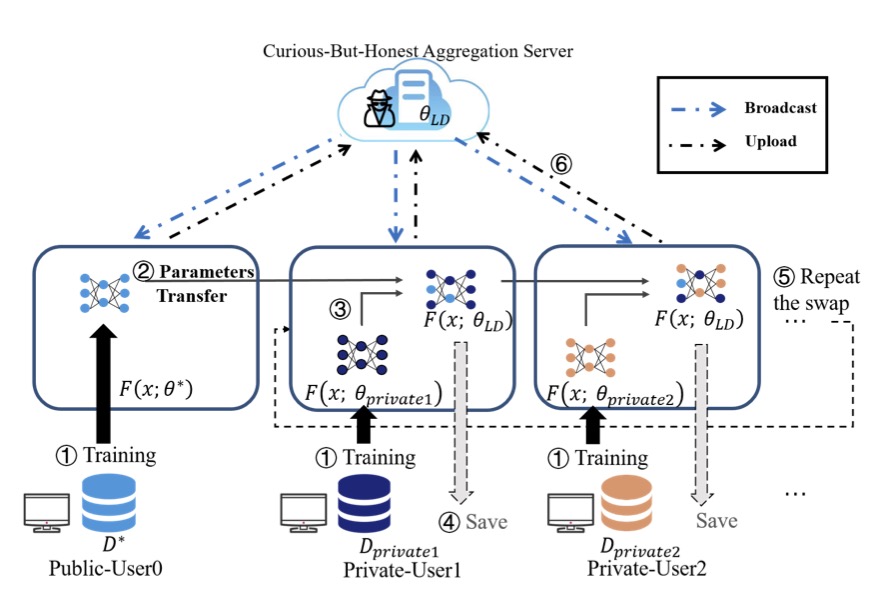

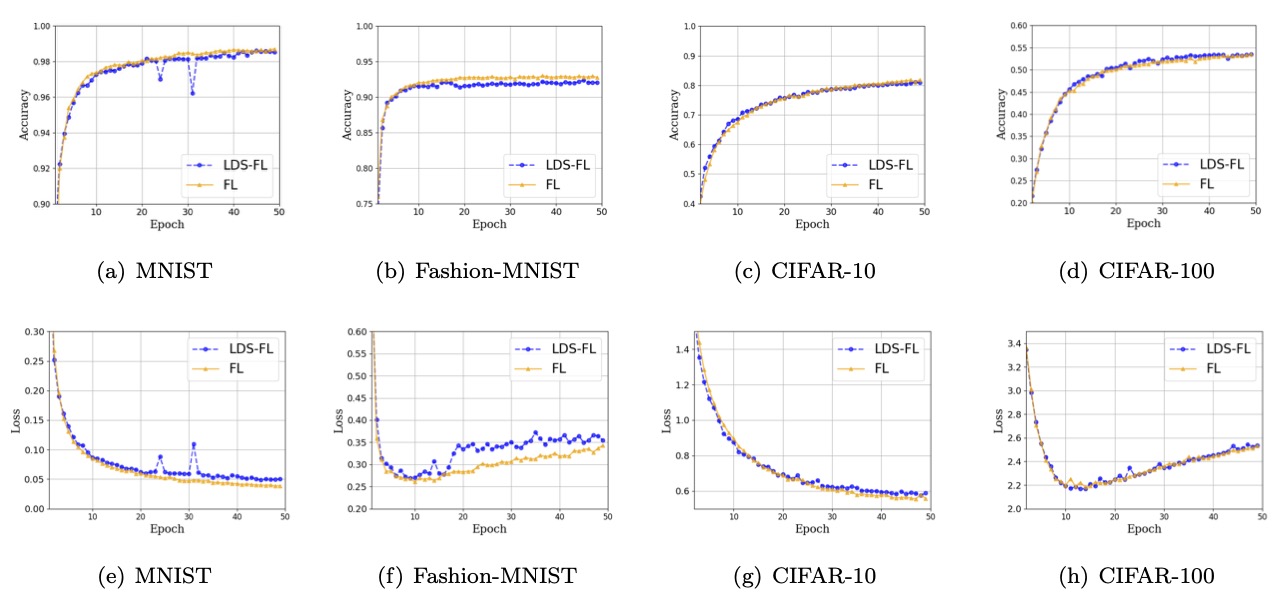

Federated Learning (FL) has attracted extraordinary attention from industry and academia because it protects privacy and enables collaborative training on isolated datasets. However, since machine learning algorithms usually try to find an optimal hypothesis that fits the training data, attackers can also exploit the shared models and reverse-analyze users’ private information. There is still no good solution to the privacy-accuracy trade-off that makes information leakage more difficult while still guaranteeing the convergence of learning. In this work, we proposed a Loss Differential Strategy (LDS) for parameter replacement in FL. The key idea of our strategy is to preserve the performance of the private model through parameter replacement with multi-user participation, while significantly reducing the efficiency of privacy attacks on the model. To evaluate the proposed method, we conducted comprehensive experiments on four typical machine learning datasets, defending against membership inference attacks. For example, the accuracy on MNIST is near 99%, while the accuracy of the attack is reduced by 10.1% compared with FedAvg. Our method also outperforms other traditional privacy protection mechanisms in terms of accuracy and privacy preservation.

Representative papers

- Taiyu Wang, Qinglin Yang, Kaiming Zhu, Junbo Wang, Chunhua Su, Kento Sato, “LDS-FL: Loss Differential Strategy based Federated Learning for Privacy Preserving”, in IEEE Transactions on Information Forensics and Security, doi: 10.1109/TIFS.2023.3322328, 2023

- Satoru Hamamoto, Masaki Oura, Atsuomi Shundo, Daisuke Kawaguchi, Satoru Yamamoto, Hidekazu Takano, Masayuki Uesugi, Akihisa Takeuchi, Takahiro Iwai, Yasuo Seto, Yasumasa Joti, Kento Sato, Keiji Tanaka and Takaki Hatsui, “Demonstration of efficient transfer learning in segmentation problem in synchrotron radiation X-ray CT data for epoxy resin”, Science and Technology of Advanced Materials: Methods, doi: 10.1080/27660400.2023.2270529, 2023

- Fu Xiang, Hao Tang, Huimin Liao, Xin Huang, Wubiao Xu, Shimeng Meng, Weiping Zhang, Luanzheng Guo and Kento Sato, “A High-dimensional Algorithm-Based Fault Tolerance Scheme”, APDCM 2023, IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), St. Petersburg, Florida USA, 2023, doi: 10.1109/IPDPSW59300.2023.00061

- Takaaki Fukai, Kento Sato and Takahiro Hirofuchi, “Analyzing I/O Performance of a Hierarchical HPC Storage System for Distributed Deep Learning”, The 23rd International Conference on Parallel and Distributed Computing, Applications and Technologies (PDCAT’22), December, 2022, Sendai, Japan

- Xi Zhu, Junbo Wang, Wuhui Chen, Kento Sato, “Model compression and privacy preserving framework for federated learning”, Future Generation Computer Systems, 2022, ISSN 0167-739X, https://doi.org/10.1016/j.future.2022.10.026

- Amitangshu Pal, Junbo Wang, Yilang Wu, Krishna Kant, Zhi Liu, Kento Sato, “Social Media Driven Big Data Analysis for Disaster Situation Awareness: A Tutorial”, in IEEE Transactions on Big Data, doi: 10.1109/TBDATA.2022.3158431, Mar., 2022

- Feiyuan Liang, Qinglin Yang, Ruiqi Liu, Junbo Wang, Kento Sato, Jian Guo, “Semi-Synchronous Federated Learning Protocol with Dynamic Aggregation in Internet of Vehicles”, in IEEE Transactions on Vehicular Technology, doi: 10.1109/TVT.2022.3148872, Feb., 2022

- Akihiro Tabuchi, Koichi Shirahata, Masafumi Yamazaki, Akihiko Kasagi, Takumi Honda, Kouji Kurihara, Kentaro Kawakami, Tsuguchika Tabaru, Naoto Fukumoto, Akiyoshi Kuroda, Takaaki Fukai and Kento Sato, “The 16,384-node Parallelism of 3D-CNN Training on An Arm CPU based Supercomputer”, 28th IEEE International Conference on High Performance Computing, Data, and Analytics (HiPC2021), Nov, 2021

- Steven Farrell, Murali Emani, Jacob Balma, Lukas Drescher, Aleksandr Drozd, Andreas Fink, Geoffrey Fox, David Kanter, Thorsten Kurth, Peter Mattson, Dawei Mu, Amit Ruhela, Kento Sato, Koichi Shirahata, Tsuguchika Tabaru, Aristeidis Tsaris, Jan Balewski, Ben Cumming, Takumi Danjo, Jens Domke, Takaaki Fukai, Naoto Fukumoto, Tatsuya Fukushi, Balazs Gerofi, Takumi Honda, Toshiyuki Imamura, Akihiko Kasagi, Kentaro Kawakami, Shuhei Kudo, Akiyoshi Kuroda, Maxime Martinasso, Satoshi Matsuoka, Kazuki Minami, Prabhat Ram, Takashi Sawada, Mallikarjun Shankar, Tom St. John, Akihiro Tabuchi, Venkatram Vishwanath, Mohamed Wahib, Masafumi Yamazaki, Junqi Yin and Henrique Mendonca, “MLPerf HPC: A Holistic Benchmark Suite for Scientific Machine Learning on HPC Systems”, The Workshop on Machine Learning in High Performance Computing Environments (MLHPC) 2021 in conjunction with SC21, Nov, 2021

- Rupak Roy, Kento Sato, Subhadeep Bhattacharya, Xingang Fang, Yasumasa Joti, Takaki Hatsui, Toshiyuki Hiraki, Jian Guo and Weikuan Yu, “Compression of Time Evolutionary Image Data through Predictive Deep Neural Networks”, in the proceedings of the 21st IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGrid 2021), May, 2021