5.7. Master-worker type job

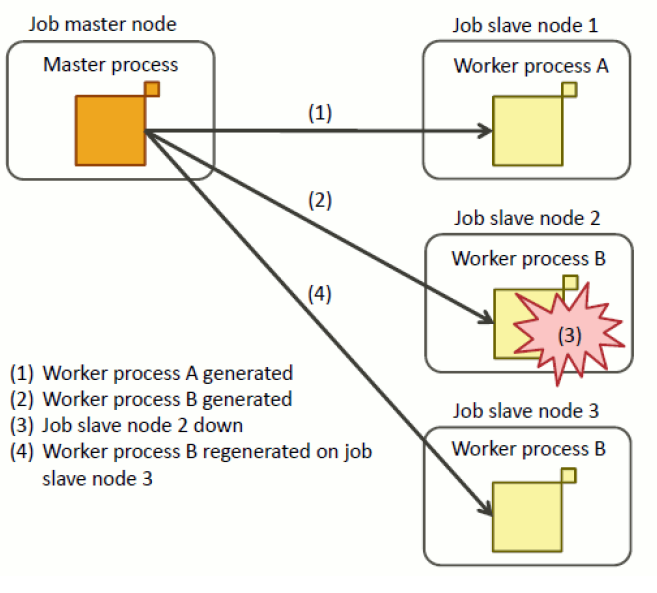

A master-worker type job is one of the job models with the following characteristics.

- It consists of a job script process, a master process, and worker processes. The master process and the worker processes cooperate to execute calculation tasks (the processing units of a parallel program).

The master process supervises the entire calculation task, creates and manages worker processes, and compiles the calculation results.

The worker process executes the calculation task requested by the master process and returns the result to the master process.

Even if the compute node assigned to the master-worker type job goes down or the process ends abnormally, the master-worker type job continues as long as the job script process is running.

Using this feature, users can continue the calculation task by building a mechanism that re-executes the worker process on another node when a compute node goes down or the worker process ends abnormally.
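The re-execution mechanism described above could be sketched in a job script as follows. This is a minimal sketch under stated assumptions, not part of the job operation software: `launch_worker` is a hypothetical placeholder for whatever actually starts a worker on a node (for example, a pjaexe invocation), `run_with_failover` is a hypothetical helper, and the node names are purely illustrative.

```shell
#!/bin/bash
# Hypothetical launcher: stands in for the real command that starts a worker
# on the given node. It fails for nodes listed in FAILED_NODES so that the
# failover path can be exercised without a real cluster.
FAILED_NODES="${FAILED_NODES:-}"
launch_worker() {
    local node=$1 task=$2
    case " ${FAILED_NODES} " in
        *" ${node} "*) return 2 ;;   # simulate a node that is down
    esac
    echo "task ${task} done on ${node}"
}

# Try each candidate node in turn until the task succeeds.
# Usage: run_with_failover <task> <node1> [<node2> ...]
run_with_failover() {
    local task=$1
    shift
    local node
    for node in "$@"; do
        if launch_worker "${node}" "${task}"; then
            return 0
        fi
        echo "node ${node} failed, retrying task ${task} elsewhere" >&2
    done
    return 1   # every candidate node failed
}
```

In a real job, the master process would keep the list of candidate nodes and remove a node from it once a launch on that node has failed.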

See also

The node where the job script process runs is called the “job master node”, and the other nodes are called “job slave nodes”. The purpose of the master process is to manage the worker processes that run on job slave nodes, so it must run on the job master node.

The “bulk job” is a similar job model in that it also creates multiple processes. A bulk job is a method in which the same job script is submitted as multiple sub-jobs, and each sub-job operates independently. For bulk jobs, the number of sub-jobs is specified at job submission and does not change during execution. In a master-worker type job, by contrast, the master process and the worker processes operate as a single job while performing inter-process communication. In addition, worker processes are generated dynamically by the master process, so their number changes during job execution.

For details on master-worker type jobs, see the manual “Job Operation Software End-user’s Guide Master-Worker Type Jobs”.

Three methods of generating worker processes are supported:

- Dynamic generation of worker processes (5.7.2): This method is used when both the master process and the worker processes are MPI programs. The job operation software selects the node on which each worker process is generated.

- Worker process creation request to the Agent process (5.7.3): This method is used when the worker process is not an MPI program. The user controls and manages both the generation of worker processes and the selection of the nodes on which they are generated.

- Worker process generation by the pjaexe command (5.7.4): This method is used when the worker process is not an MPI program. The user controls and manages the selection of the nodes on which worker processes are generated. However, the pjaexe command provided by the job operation software is used to generate the worker processes.

5.7.1. Job Submission

To submit a master-worker type job, specify the --mswk option of the pjsub command together with the job script created for the chosen worker process generation method.

[Style]

[_LNlogin]$ pjsub --mswk Job script

Attention

Master-worker type jobs must use torus as the node allocation method. You can run master-worker type jobs on any resource group for which torus can be specified.

The resource specification (-L), node shape, and so on must be given as arguments of the pjsub command or in the job script as necessary.

The --mswk option of the pjsub command cannot be specified together with the --step, --bulk, or --interact option.

In a master-worker type job, the node that generates a worker process is determined by the parallel execution environment of the job operation software, or the user specifies it when generating the process. Therefore, specifying the --mpi rank-map-hostfile option of the pjsub command has no meaning. If this option is specified, it is ignored.
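The option restrictions above can be checked before submission. The following is a minimal sketch, assuming the options are given on the command line rather than as #PJM lines in the job script; `check_mswk_args` is a hypothetical helper and is not provided by the job operation software.

```shell
#!/bin/bash
# Check a pjsub argument list for options that conflict with --mswk.
# Usage: check_mswk_args <pjsub arguments...>
# Returns 0 if the combination is acceptable, 1 otherwise.
check_mswk_args() {
    local has_mswk=0 has_hostfile=0 conflict="" arg
    for arg in "$@"; do
        case "${arg}" in
            --mswk) has_mswk=1 ;;
            --step|--bulk|--interact) conflict="${conflict} ${arg}" ;;
            *rank-map-hostfile*) has_hostfile=1 ;;
        esac
    done
    if [ "${has_mswk}" -eq 0 ]; then
        return 0    # not a master-worker type job: nothing to check
    fi
    if [ -n "${conflict}" ]; then
        echo "error: --mswk cannot be combined with:${conflict}" >&2
        return 1
    fi
    if [ "${has_hostfile}" -eq 1 ]; then
        echo "warning: rank-map-hostfile is ignored in master-worker type jobs" >&2
    fi
    return 0
}
```

A wrapper script could call `check_mswk_args "$@" && pjsub "$@"` so that an invalid combination is rejected before the job is submitted.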

5.7.2. Dynamic generation of worker processes

In the method of dynamically generating worker processes from the master process, worker process generation and communication use MPI mechanisms. The user must implement the following features:

- Master program (master process)
  - Generate worker processes
  - Request execution by worker processes
  - Check that worker processes are alive

- Worker program (worker process)
  - Receive computation requests from the master process and send computation results
  - Send a calculation completion notification to the master process

Some MPI functions and MPI subroutines in the MPI program executed in the master-worker type job need to be replaced with those for the master-worker type job. This is because the job operation software needs to perform processing specific to the master-worker type job.

The following are the names of these MPI functions and MPI subroutines.

| MPI function name in the normal job | MPI function name in the master-worker type job |
|---|---|
| MPI_Comm_connect() | FJMPI_Mswk_connect() |
| MPI_Comm_disconnect() | FJMPI_Mswk_disconnect() |
| MPI_Comm_accept() | FJMPI_Mswk_accept() |

Note

The above MPI functions for the master-worker type job are declared in the header file mpi-ext.h.

| MPI subroutine name in the normal job | MPI subroutine name in the master-worker type job |
|---|---|
| MPI_COMM_CONNECT() | FJMPI_MSWK_CONNECT() |
| MPI_COMM_DISCONNECT() | FJMPI_MSWK_DISCONNECT() |
| MPI_COMM_ACCEPT() | FJMPI_MSWK_ACCEPT() |

Note

The above MPI subroutines for the master-worker type job are declared in the modules mpi_f08_ext and mpi_ext. These modules correspond to the MPI modules mpi_f08 and mpi respectively, so specify the one that matches your program in the USE statement.

Attention

To use the master-worker type job, use the language environment version ‘4.5.0 tcsds-1.2.31’ or later.

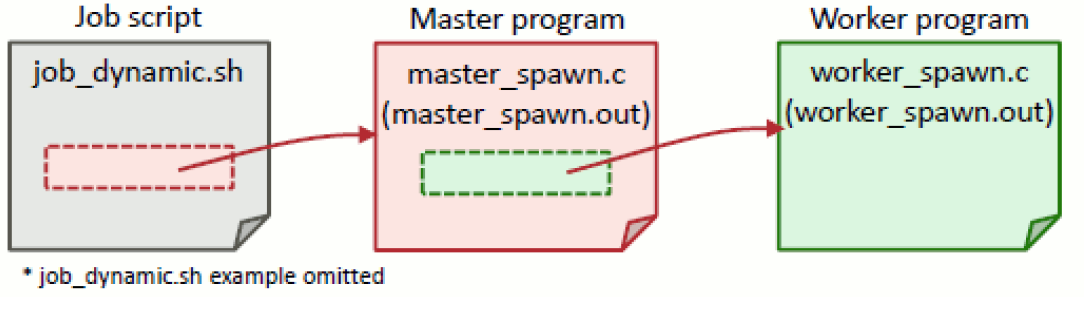

[Master program master_spawn.c]

#include <mpi.h>

#include <mpi-ext.h>

#include <stdio.h>

#include <string.h>

int main(int argc, char **argv) {

int world_size, universe_size;

int *universe_size_p;

int flag;

MPI_Status status;

MPI_Comm worker_comm;

char master_port[MPI_MAX_PORT_NAME] = "";

char worker_port[MPI_MAX_PORT_NAME] = "";

// Root rank of each communicator

const int self_root = 0; // SELF (MPI_COMM_SELF)

const int master_root = 0; // MASTER (MPI_COMM_WORLD)

const int worker_root = 0; // WORKER (worker_comm)

const int tag = 0;

char *message = "Hello";

// Initialization

MPI_Init(&argc, &argv);

// Get world_size, universe_size

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

if (world_size != 1) {

// When there are multiple master processes

fprintf(stderr, "Error! world_size=%d (expected 1)\n", world_size);

MPI_Abort(MPI_COMM_WORLD, 1);

}

MPI_Comm_get_attr(MPI_COMM_WORLD, MPI_UNIVERSE_SIZE, &universe_size_p, &flag);

if (flag == 0) {

// If getting universe_size failed

fprintf(stderr, "Error! cannot get universe_size\n");

MPI_Abort(MPI_COMM_WORLD, 1);

}

universe_size = *universe_size_p;

printf("universe_size=%d\n", universe_size);

if (universe_size == 1) {

// If universe_size is 1

fprintf(stderr, "Error! universe_size=%d (expected > 1)\n", universe_size);

MPI_Abort(MPI_COMM_WORLD, 1);

}

// Open a port for communication with the worker process.

MPI_Open_port(MPI_INFO_NULL, master_port);

printf("master_port=%s\n", master_port);

// Generate worker process

MPI_Comm_spawn("./worker_spawn.out", MPI_ARGV_NULL, universe_size - 1,

MPI_INFO_NULL, self_root, MPI_COMM_SELF, &worker_comm, MPI_ERRCODES_IGNORE);

// Send a port name to worker process

MPI_Send(master_port, MPI_MAX_PORT_NAME, MPI_CHAR, worker_root, tag, worker_comm);

// Disconnect from the worker process

FJMPI_Mswk_disconnect(&worker_comm);

// Receive data from the worker process (the port name of the worker process is sent)

FJMPI_Mswk_accept(master_port, MPI_INFO_NULL, self_root, MPI_COMM_SELF, &worker_comm);

MPI_Recv(worker_port, MPI_MAX_PORT_NAME, MPI_CHAR, worker_root, tag, worker_comm, &status);

printf("worker_port=%s\n", worker_port);

FJMPI_Mswk_disconnect(&worker_comm);

// Send data to worker process

FJMPI_Mswk_connect(worker_port, MPI_INFO_NULL, self_root, MPI_COMM_SELF, &worker_comm);

MPI_Send(message, strlen(message) + 1, MPI_CHAR, worker_root, tag, worker_comm);

FJMPI_Mswk_disconnect(&worker_comm);

// Closing process

MPI_Close_port(master_port);

MPI_Finalize();

}

[Worker program worker_spawn.c]

#include <mpi.h>

#include <mpi-ext.h>

#include <stdio.h>

int main(int argc, char **argv) {

int rank;

MPI_Status status;

MPI_Comm master_comm;

char master_port[MPI_MAX_PORT_NAME] = "";

char worker_port[MPI_MAX_PORT_NAME] = "";

// Root rank of each communicator

const int self_root = 0; // SELF (MPI_COMM_SELF)

const int master_root = 0; // MASTER (master_comm)

const int worker_root = 0; // WORKER (MPI_COMM_WORLD)

const int tag = 0;

char message[100] = "";

// Initialize

MPI_Init(&argc, &argv);

MPI_Comm_get_parent(&master_comm);

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

printf("Hello! rank=%d\n", rank);

if (rank == worker_root) {

MPI_Open_port(MPI_INFO_NULL, worker_port);

printf("worker_port=%s\n", worker_port); fflush(stdout);

}

if (rank == worker_root) {

// Receive data from master process

MPI_Recv(master_port, MPI_MAX_PORT_NAME, MPI_CHAR, master_root, tag, master_comm, &status);

printf("master_port=%s\n", master_port); fflush(stdout);

}

// Disconnect the connection with master process

FJMPI_Mswk_disconnect(&master_comm);

// Send a port name to master process

if (rank == worker_root) {

FJMPI_Mswk_connect(master_port, MPI_INFO_NULL, self_root, MPI_COMM_SELF, &master_comm);

MPI_Send(worker_port, MPI_MAX_PORT_NAME, MPI_CHAR, master_root, tag, master_comm);

FJMPI_Mswk_disconnect(&master_comm);

}

// Receive data from master process

if (rank == worker_root) {

FJMPI_Mswk_accept(worker_port, MPI_INFO_NULL, self_root, MPI_COMM_SELF, &master_comm);

// Limit the receive count to the size of the message buffer.

MPI_Recv(message, (int)sizeof(message), MPI_CHAR, master_root, tag, master_comm, &status);

printf("message=%s\n", message);

FJMPI_Mswk_disconnect(&master_comm);

}

// Closing process

if (rank == worker_root) {

MPI_Close_port(worker_port);

}

MPI_Finalize();

}

The dynamic worker process generation method requires the --mpi "shape=1" and --mpi "proc=1" options of the pjsub command so that the master process generated at the start of the MPI program starts only on the job master node.

The following example assigns 385 nodes to a job and 1 node to the master process generated when the MPI program is started. The remaining 384 nodes are used to dynamically generate worker processes.

[_LNlogin]$ mpifccpx -o worker_spawn.out worker_spawn.c

[_LNlogin]$ mpifccpx -o master-spawn.out master_spawn.c

[_LNlogin]$ cat job_dynamic.sh

#!/bin/bash -x

#PJM -L "node=385"

#PJM -L "rscgrp=large"

#PJM -L "elapse=10:00"

#PJM --mpi "shape=1"

#PJM --mpi "proc=1"

#PJM -g groupname

#PJM -x PJM_LLIO_GFSCACHE=/vol000N

#PJM -s

export PLE_MPI_STD_EMPTYFILE=off

mpiexec -stdout-proc ./output.%j/%/1000r/stdout -stderr-proc ./output.%j/%/1000r/stderr ./master-spawn.out

[_LNlogin]$ pjsub --mswk job_dynamic.sh

5.7.3. Worker process creation request to Agent process

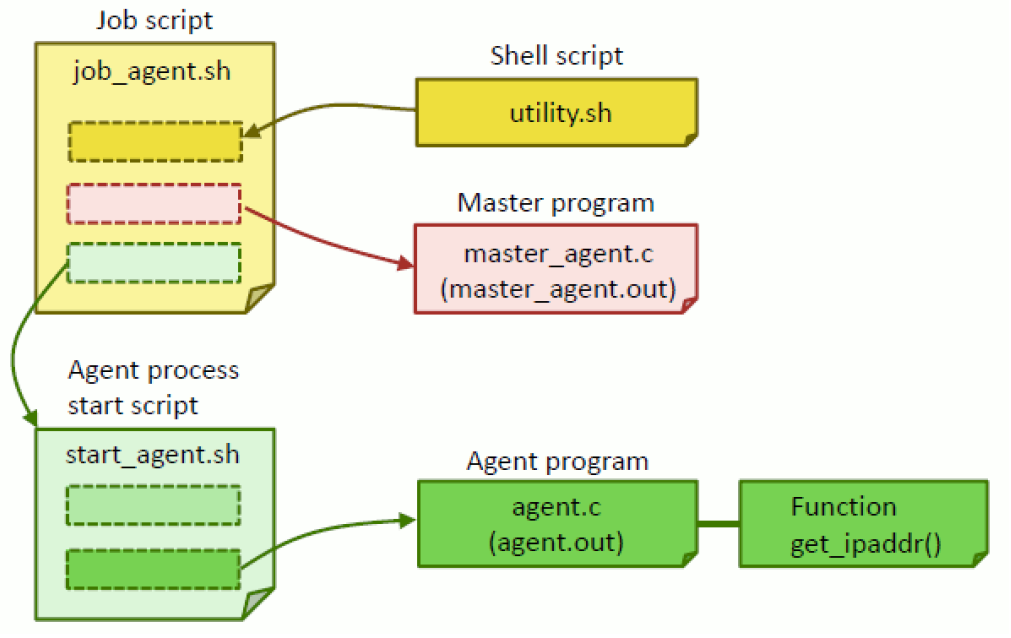

The method of creating worker processes from the Agent process (hereinafter referred to as the Agent process method) is used when the worker process is a non-MPI program. In the Agent process method, the number of nodes assigned to the job and the number of processes generated by the mpiexec command must be the same so that exactly one Agent process is generated on each node.
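The requirement that the node count and the process count match can be checked before submitting the job script. The following is a minimal sketch, assuming that `node=` and `proc=` are written as plain integers on #PJM lines, as in the example job script in this section; `pjm_value` and `check_agent_layout` are hypothetical helpers.

```shell
#!/bin/bash
# Extract the integer value of a "key=value" resource from the #PJM lines
# of a job script. Prints nothing if the key is not found.
# Usage: pjm_value <job_script> <key>
pjm_value() {
    sed -n "s/^#PJM .*[\" ,]$2=\([0-9][0-9]*\).*/\1/p" "$1" | head -n 1
}

# Verify that the job script requests one process per node.
# Usage: check_agent_layout <job_script>
check_agent_layout() {
    local script=$1 nodes procs
    nodes=$(pjm_value "${script}" node)
    procs=$(pjm_value "${script}" proc)
    if [ -z "${nodes}" ] || [ -z "${procs}" ]; then
        echo "node= or proc= not found in ${script}" >&2
        return 2
    fi
    if [ "${nodes}" -ne "${procs}" ]; then
        echo "node=${nodes} but proc=${procs}: expected one Agent process per node" >&2
        return 1
    fi
    echo "OK: ${nodes} nodes, ${procs} Agent processes"
}
```

For example, `check_agent_layout job_agent.sh` reports a mismatch if the two values differ. Node shapes such as multi-dimensional specifications are outside the scope of this sketch.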

In this method, the user must implement the following functions for the job:

- Job script
  - Execute the master program, which becomes the master process
  - Generate the Agent processes
  - Wait for the master process to terminate

- Master program (master process)
  - Check that the Agent processes are alive
  - Request the Agent processes to generate worker processes

- Agent program (Agent process)
  - Establish communication with the master process
  - Create worker processes
  - Send the processing results of worker processes to the master process

Here is an example of the program shown in the figure below.

[Job script job_agent.sh]

#!/bin/bash

#PJM -L "node=385"

#PJM -L "rscgrp=large"

#PJM -L "elapse=10:00"

#PJM --mpi "proc=385"

#PJM -g groupname

#PJM -x PJM_LLIO_GFSCACHE=/vol000N

. utility.sh

# Create a master process.

./master_agent.out port_num.txt &

MASTER_PID=$!

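# Wait until the master process has written its port number.

while [ ! -s port_num.txt ]; do sleep 1; done

PORT_NUM=$(cat port_num.txt)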

# Get the IP address of the job master node (this node).

IP_ADDR=$(print_ipaddr "tofu1")

# Create an Agent process with the mpiexec command.

mpiexec start_agent.sh "${IP_ADDR}" "${PORT_NUM}" &

wait ${MASTER_PID}

[Master program master_agent.c]

#include <stdio.h>

#include <sys/types.h>

#include <sys/socket.h>

int main(int argc, char **argv)

{

char *port_file = argv[1];

int sockfd = socket(...); // Create a socket.

bind(sockfd, ...); // Bind a socket to a specific port.

listen(sockfd, ...); // Wait for connection from worker process.

FILE *fp = fopen(port_file, "w");

fprintf(fp, "%d", port_number); // Write a port number to file.

fclose(fp);

while (1) {

accept(sockfd, ...); // Accept the connection from worker process.

... // Request the processing to worker process

}

}

[Agent process start up script start_agent.sh]

#!/bin/bash

./agent.out "$@"

[Agent program agent.c]

#include <string.h>

#include <stdlib.h>

int main(int argc, char **argv)

{

// Store the IP address and port number of the job master node given as command-line arguments.

char *ip_addr = argv[1];

int port_num = atoi(argv[2]);

const char *my_ip_addr;

get_ipaddr("tofu1", &my_ip_addr);

// If the current node is a job master node (if it has the same IP address as the job master node),

// terminate the Agent process.

if (strcmp(my_ip_addr, ip_addr) == 0) {

exit(0);

}

// Connect to the job master node (using a socket connection, for example).

connect_to(ip_addr, port_num);

// Perform processing as instructed by the job master node, if necessary.

...

}

[get_ipaddr() function]

The get_ipaddr() function returns the IP address of the specified network device on the local node as a character string.

#include <string.h>

#include <unistd.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <sys/ioctl.h>

#include <netinet/in.h>

#include <net/if.h>

#include <arpa/inet.h>

int get_ipaddr(const char *device_name, const char **ip_addr)

{

int fd;

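struct ifreq ifr;

fd = socket(AF_INET, SOCK_DGRAM, 0);

if (fd < 0) {

return -1;

}

// Zero the request so that ifr_name is always NUL-terminated.

memset(&ifr, 0, sizeof(ifr));

ifr.ifr_addr.sa_family = AF_INET;

strncpy(ifr.ifr_name, device_name, IFNAMSIZ - 1);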

int rc = ioctl(fd, SIOCGIFADDR, &ifr);

if (rc == 0) {

*ip_addr = inet_ntoa(((struct sockaddr_in *)&ifr.ifr_addr)->sin_addr);

}

close(fd);

return rc;

}

[utility.sh]

# Outputs the IP address of the specified network interface.

# Usage: print_ipaddr <interface>

print_ipaddr() {

local INTERFACE=$1

LANG=C ip addr show dev "${INTERFACE}" | sed -n '/.*inet \([0-9.]*\).*/{s//\1/;p}'

}

# Executes the specified command with a timeout.

# Usage: timeout <timeout_sec> <command> <arg1> <arg2> ...

timeout() {

local TIMEOUT=$1

shift 1

# Record command execution and process ID

eval "$@" &

local PID=$!

echo ${PID}

while true; do

# Check the survival of the command process.

if ! ps -p "${PID}" >/dev/null 2>&1; then

# Exit the loop because the process has ended.

break

fi

if [ "${TIMEOUT}" -le 0 ]; then

# On timeout, forcibly terminate the process.

kill -KILL "${PID}"

break

fi

# Sleep for 1 second, then return to the top of the loop.

sleep 1

TIMEOUT=$((TIMEOUT - 1))

done

# Return process end code.

wait "${PID}"

return $?

}

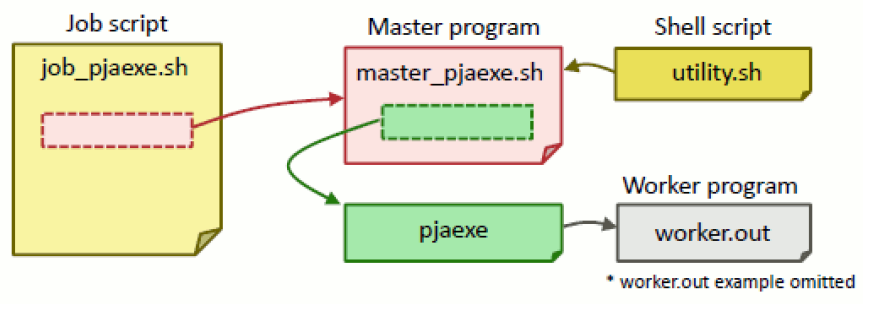

5.7.4. Worker process generation by pjaexe command

In addition to having an Agent process create worker processes, worker processes can be created with the pjaexe command provided by the job operation software. Use this method when the worker process is a non-MPI program.

With this method, the user must implement the following features:

- Job script
  - Start worker processes with the pjaexe command

- Master program (master process)
  - Check that worker processes exist (determined by the presence or absence of a connection from each worker process)
  - Request worker processes to execute tasks

- Worker program (worker process)
  - Establish a connection with the master process
  - Send calculation results to the master process

Here is an example of the program shown in the figure below.

[Job script job_pjaexe.sh]

#!/bin/bash

#PJM -L "node=385"

#PJM -L "rscgrp=large"

#PJM -L "elapse=10:00"

#PJM -g groupname

#PJM -x PJM_LLIO_GFSCACHE=/vol000N

# Execute master process

./master_pjaexe.sh

[Master program master_pjaexe.sh]

#!/bin/bash

. utility.sh

# Get job master node IP address (this node)

IP_ADDR=$(print_ipaddr "tofu1")

# Initialize the socket so that connections from worker processes can be accepted

PORT_NUM=port number

...

# Start the worker process worker.out on all job slave nodes.

for X in $(seq 0 11); do

for Y in $(seq 0 7); do

VCOORD="(${X},${Y})"

# If the pjaexe command does not return within 60 seconds, it will time out.

timeout 60 pjaexe --vcoord \"${VCOORD}\" ./worker.out "${IP_ADDR}" "${PORT_NUM}"

RC=$?

if [ "${RC}" -eq 1 ]; then

# Terminate the master process abnormally if the failure is due to a specification error by the user.

exit 1

fi

if [ "${RC}" -ne 0 ]; then

# If the pjaexe command ended abnormally, assume the node is down and add it to the list of failed nodes.

echo "${VCOORD}" >> broken_node_list.txt

fi

done

done

# Communication with the worker process worker.out and aggregation of calculation results

...<Omitted> ...

# Close master process

exit

See also

Normally, the program that becomes the master process is written in a programming language such as C or Fortran, but here, an example of implementing the master program with a shell script is shown to explain the processing logic. Processing that is difficult to implement with shell scripts (socket initialization processing and communication processing with worker processes) is omitted. For these processes, refer to general means for interprocess communication.

[utility.sh]

# Output IP address of specified network interface.

# Usage: print_ipaddr <interface>

print_ipaddr() {

local INTERFACE=$1

LANG=C ip addr show dev "${INTERFACE}" | sed -n '/.*inet \([0-9.]*\).*/{s//\1/;p}'

}

# Execute the specified command with time out

# Usage: timeout <timeout_sec> <command> <arg1> <arg2> ...

timeout() {

local TIMEOUT=$1

shift 1

# Record command execution and process ID

eval "$@" &

local PID=$!

echo ${PID}

while true; do

# Check the survival of the command process.

if ! ps -p "${PID}" >/dev/null 2>&1; then

# Exit the loop because the process has ended.

break

fi

if [ "${TIMEOUT}" -le 0 ]; then

# On timeout, forcibly terminate the process.

kill -KILL "${PID}"

break

fi

# Back to the head of loop after sleeping for 1 second.

sleep 1

TIMEOUT=$((TIMEOUT - 1))

done

# Return process end code.

wait "${PID}"

return $?

}

5.7.5. Notes on job creation

A maximum of 128 pjaexe commands can be executed concurrently in one job.

If you try to run more than 128, pjaexe terminates abnormally with the following message.

[ERR.] PLE 0050 plexec cannot be executed any further.
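One way to stay within this limit is to launch commands in fixed-size batches. The following is a minimal sketch, not part of the job operation software: `run_in_batches` is a hypothetical helper, and the command passed to it stands in for a user-defined wrapper around pjaexe. It assumes that each launched command returns when its work is finished.

```shell
#!/bin/bash
# Run one command per input line, keeping at most <max_parallel> running
# at the same time. Each input line is appended as the last argument.
# Usage: run_in_batches <max_parallel> <command> [<fixed args>...] < list
run_in_batches() {
    local max=$1
    shift
    local running=0 item
    while IFS= read -r item; do
        "$@" "${item}" &
        running=$((running + 1))
        if [ "${running}" -ge "${max}" ]; then
            wait            # drain the whole batch before launching more
            running=0
        fi
    done
    wait                    # wait for the last, possibly partial, batch
}
```

For example, `run_in_batches 128 launch_one < coords.txt` would start at most 128 commands at a time, where `launch_one` is a hypothetical function that calls pjaexe for the coordinate passed as its last argument. Waiting for a whole batch is simpler than refilling slots one by one, at the cost of some idle time when commands finish at different speeds.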

- The pjaexe command can generate processes on multiple nodes in a single run by using the --vcoordfile option. Use this option to reduce the number of times the pjaexe command is executed.

[vcoordfile]

(0)

(1)

(2)

(3)

(4)

[command line]

pjaexe --vcoordfile vcoordfile ./worker.out "${IP_ADDR}" "${PORT_NUM}"

- It is the job creator’s responsibility to create worker processes, detect anomalies, and deal with them.

- In the method of dynamically generating worker processes, if the node on which a worker process is running goes down, all worker processes belonging to the same MPI_COMM_WORLD as the worker process on that node can no longer operate. These worker processes remain until the user terminates them or the job ends. Also, the nodes where these worker processes were running are not selected as destinations for regenerating worker processes until the mpiexec command ends. Note, however, that once the mpiexec command is executed again, the down node may be selected again as a worker process destination.
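A coordinate file for the --vcoordfile option mentioned in the notes above can be generated rather than written by hand. The following is a minimal sketch, assuming a two-dimensional node shape and a file format of one coordinate per line; `gen_vcoords` is a hypothetical helper, and the 12 x 8 shape is taken from the loop in master_pjaexe.sh.

```shell
#!/bin/bash
# Print the virtual coordinates of an X-by-Y node grid, one per line.
# Usage: gen_vcoords <x_size> <y_size>
gen_vcoords() {
    local xs=$1 ys=$2
    local x=0 y
    while [ "${x}" -lt "${xs}" ]; do
        y=0
        while [ "${y}" -lt "${ys}" ]; do
            echo "(${x},${y})"
            y=$((y + 1))
        done
        x=$((x + 1))
    done
}
```

Running `gen_vcoords 12 8 > vcoordfile` writes 96 coordinates, and the resulting file can then be passed to the pjaexe command line shown in the note. Check the manual for the exact file format before relying on this.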

Note the following when using MPI communication functions in a master-worker type job.

According to the MPI standard, when MPI communication processing fails (for example, when the communication destination process ends abnormally during processing or the communication destination node goes down), the process that called the communication function also terminates abnormally by default.

This communication processing may be executed not only when the user explicitly calls MPI communication functions such as MPI_Send(), MPI_Recv(), and MPI_Bcast(), but also by the internal processing of the MPI library. In such a case, the master process appears to terminate abnormally without warning while it is executing processing unrelated to communication.

When using MPI communication functions in a master-worker type job, take the following actions so that the master process does not end abnormally when a worker process ends abnormally. This reduces the probability of the master process terminating abnormally.

- After calling the MPI_Comm_spawn() function, call the FJMPI_Mswk_disconnect() function. When a process is dynamically created by the MPI_Comm_spawn() function, a communication connection is established between the created process and the process that called MPI_Comm_spawn(). MPI's internal communication processing occurs while this connection is established and does not occur once it is disconnected. For this reason, FJMPI_Mswk_disconnect() must be called after calling MPI_Comm_spawn().

- Call FJMPI_Mswk_connect() or FJMPI_Mswk_accept() before calling an MPI communication function, and call FJMPI_Mswk_disconnect() after calling it. If the connection destination process has terminated abnormally, the FJMPI_Mswk_connect(), FJMPI_Mswk_accept(), and FJMPI_Mswk_disconnect() functions only return an error, and the calling process does not terminate abnormally. Therefore, call FJMPI_Mswk_connect() or FJMPI_Mswk_accept() every time before calling an MPI communication function, as shown in the following example. This reduces the impact of worker process errors on the master process.

// [Example] Connecting from the master process to a worker process

// Master process

FJMPI_Mswk_connect(worker_port, …, &worker_comm);

MPI_Send(…, worker_comm, …);

FJMPI_Mswk_disconnect(&worker_comm);

// Worker process

FJMPI_Mswk_accept(worker_port, …, &master_comm);

MPI_Recv(…, master_comm, …);

FJMPI_Mswk_disconnect(&master_comm);

If the above procedure is not followed, the master process may terminate abnormally at any time after a worker process terminates abnormally or after the node where a worker process is running goes down. When the master process terminates abnormally, the mpiexec command also terminates abnormally. However, the job script continues to run.

5.7.6. Impact of system failures

This section describes the effects of a system-side error, such as a node failure, that occurs during execution of a master-worker type job.

5.7.6.1. Impact on job execution

Depending on the nature of the error, the master-worker type job may end or continue.

- Cases where the master-worker type job ends

  In the following cases, as with other job models, the master-worker type job ends and is requeued.

  - When the job master node goes down
  - When an ICC or Port failure occurs on a compute node assigned to the master-worker type job
  - When the administrator (cluster administrator) specifies that the job be terminated immediately when a node assigned to the master-worker type job is disconnected from operation
  - When a BIO/SIO/GIO allocated to a job that uses the shared temporary area or the cache area of the second-layer storage goes down

- Cases where the master-worker type job continues

  In the following cases, the master-worker type job continues. However, if a worker process becomes abnormal for one of these reasons, the user program must take measures such as executing the worker process on another node.

  - Service error of the job operation software on a job slave node
  - Job slave node down
  - Hardware (CPU or memory) error on a job slave node

See also

If the cause of job termination is on the user side (for example, exceeding a resource limit value such as CPU time), whether or not the job is requeued depends, as with other job models, on what was specified at job submission and on the job ACL function settings.

5.7.6.2. Impact on job statistics

If a node failure occurs during execution of a master-worker type job, the job statistics output by pjsub -s/-S and pjstat -v are as follows.

Item

Description

If a master-worker type job ends abnormally because the job master node goes down or because of a Fugaku failure (ICC error), the job end code in the job statistics is the same as that for a normal job. If the error does not affect the continuation of the master-worker type job, such as when only a job slave node is down, the master-worker type job is executed to the end. If the job ends without error, the job end code is 0.

This behaves in the same way as the job end code. If the job ends without error, it is “-”.

Items with “(REQUIRE)” such as “NODE NUM (REQUIRE)” are the values specified by the user when the job is submitted, regardless of whether a node is down.

Items with “(ALLOC)” such as “NODE NUM (ALLOC)” are the values determined when the job is submitted, regardless of whether a node is down.

Items with “(USE)” such as “NODE NUM (USE)” are values that exclude the failed nodes.

5.7.6.3. Impact on command display

The pjshowrsc command, provided by the job operation software, displays the number of nodes available as compute resources.

If a node assigned to a master-worker type job goes down during job execution, the down node is excluded from the available resources.

Without argument

The TOTAL and ALLOC values are reduced by the number of nodes that are down.

[Before the job slave node goes down]

[_LNlogin]$ pjshowrsc

[ CLST: fugaku-comp ]

RSCUNIT NODE

TOTAL FREE ALLOC

rscunit_ft01 158976 94269 64707

[After one job slave node goes down]

[_LNlogin]$ pjshowrsc

[ CLST: fugaku-comp ]

RSCUNIT NODE

TOTAL FREE ALLOC

rscunit_ft01 158975 94269 64706

When the -l option is specified

The TOTAL and ALLOC values for each resource are reduced by the number of nodes that are down.

[Before the job slave node goes down]

[_LNlogin]$ pjshowrsc -l

[ CLST: fugaku-comp ]

[ RSCUNIT: rscunit_ft01 ]

RSC TOTAL FREE ALLOC

node 158976 75231 83745

cpu 7630848 3611088 4019760

mem 4.4Pi 4.4Pi 672Gi

[After one job slave node goes down]

[_LNlogin]$ pjshowrsc -l

[ CLST: fugaku-comp ]

[ RSCUNIT: rscunit_ft01 ]

RSC TOTAL FREE ALLOC

node 158975 75231 83744

cpu 7630800 3611088 4019712

mem 4.4Pi 4.4Pi 672Gi