8.4. Node Temporary Area

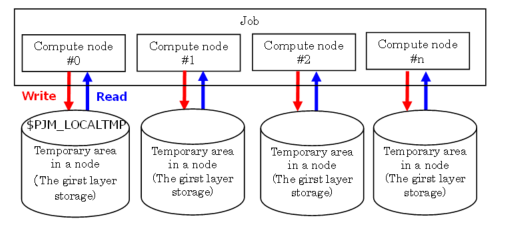

The node temporary area is a temporary area for files that are used locally within a single job. It can be referenced only from within one compute node.

The node temporary area cannot be shared among multiple compute nodes.

The node temporary area becomes available when the job starts and is deleted when the job ends. Files that must be kept, such as job execution results, must be placed in the cache area of second-layer storage or in the 2ndfs area.

To use the node temporary area, specify the desired area size with the --llio localtmp-size option of the pjsub command when submitting the job. Within a job, the path name of the node temporary area can be referenced through the environment variable ${PJM_LOCALTMP}.

The following table describes the characteristics of the node temporary area.

Characteristics of the node temporary area

| Item | Characteristic |
|---|---|
| Reference area | Of the compute nodes assigned to a job, the area can be referenced only from the same job running on that compute node. |
| Lifetime | Initialized before the job starts and deleted after the job ends. |

8.4.1. Node temporary area size

When using a node temporary area, its size can be specified when submitting a job.

The localtmp-size parameter of the --llio option of the pjsub command specifies the size of the node temporary area per node. If you do not specify a size, the default value (0MiB) set by the job ACL function is used.

| Area name | Option | Specification value |
|---|---|---|
| Temporary area in node | --llio localtmp-size | Size of the node temporary area per node |

Specify the size so that the following condition is met, which leaves at least 128MiB for the cache area of second-layer storage:

128MiB <= 87GiB - (localtmp-size + sharedtmp-size)
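The condition can be checked with shell arithmetic before a job is submitted. The following is a minimal sketch, not part of the system tooling: the function name llio_sizes_ok is hypothetical, both sizes are passed in MiB, and 87GiB from the formula is treated as 87 x 1024 MiB.

```shell
#!/bin/bash
# Hypothetical helper (not a system command): check the size condition
#   128MiB <= 87GiB - (localtmp-size + sharedtmp-size)
# Arguments: localtmp-size and (optionally) sharedtmp-size, both in MiB.
llio_sizes_ok() {
    local localtmp_mib=$1
    local sharedtmp_mib=${2:-0}          # treated as 0 when omitted
    local total_mib=$((87 * 1024))       # 87GiB expressed in MiB
    local cache_mib=$((total_mib - (localtmp_mib + sharedtmp_mib)))
    [ "$cache_mib" -ge 128 ]             # succeeds only if the condition holds
}

# Example: localtmp-size=30Gi and no shared temporary area
if llio_sizes_ok $((30 * 1024)); then
    echo "OK: at least 128MiB remains for the cache area"
else
    echo "NG: reduce localtmp-size or sharedtmp-size"
fi
```

The function returns success or failure through its exit status, so it can guard a pjsub call in a submission wrapper.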

8.4.2. How to use

The node temporary area becomes usable when you specify the option at job submission.

Submit a job.

$ pjsub --llio localtmp-size=30Gi jobscript.sh

Alternatively, you can specify the size of the node temporary area in the job script.

#!/bin/bash
#PJM --llio localtmp-size=30Gi

The path name (directory name) of the node temporary area is set in the environment variable PJM_LOCALTMP, which you can reference within the job. The following is an example of a simple job that uses the node temporary area as a temporary data storage destination. Program prog1 writes its output file out.data to the directory ${PJM_LOCALTMP}.

prog1 -o ${PJM_LOCALTMP}/out.data

Program prog2 reads the file out.data and writes the final computed result, result.data, to the directory ${PJM_LOCALTMP}.

prog2 -i ${PJM_LOCALTMP}/out.data -o ${PJM_LOCALTMP}/result.data

Before the job ends, save the file result.data to the directory ${PJM_JOBDIR} used at the time of job execution (on the global file system), with the job ID ${PJM_JOBID} appended to the file name.

cp ${PJM_LOCALTMP}/result.data ${PJM_JOBDIR}/result_${PJM_JOBID}.data
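Because the node temporary area is deleted when the job ends, a copy that fails silently loses the result. The following is a minimal sketch of a guarded save step. The function name save_result is hypothetical, and the three optional arguments exist only so the function can be tried outside a job; inside a job they default to PJM_LOCALTMP, PJM_JOBDIR, and PJM_JOBID.

```shell
#!/bin/bash
# Hypothetical helper: copy result.data out of the node temporary area,
# appending the job ID to the file name, and fail loudly if it is missing.
# Arguments (all optional): source directory, destination directory, job ID.
save_result() {
    local src_dir=${1:-${PJM_LOCALTMP}}
    local dst_dir=${2:-${PJM_JOBDIR}}
    local job_id=${3:-${PJM_JOBID}}
    local src="${src_dir}/result.data"

    if [ ! -f "$src" ]; then
        echo "save_result: ${src} not found" >&2
        return 1
    fi
    # cp's exit status becomes the function's exit status
    cp "$src" "${dst_dir}/result_${job_id}.data"
}
```

In a job script, calling save_result with no arguments after prog2 finishes, and exiting with a non-zero status if it fails, makes a lost result visible in the job's exit code.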

When copying a file from second-layer storage to the node temporary area, perform the copy with only one process per node. The following is an example of such a copy.

mpiexec sh -c 'if [ ${PLE_RANK_ON_NODE} -eq 0 ]; then cp -rf ./data/ ${PJM_LOCALTMP} ; fi'
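When several staging commands must each run once per node, the same guard can be factored into a small function. This is a minimal sketch under stated assumptions: on_node_rank0 is a hypothetical name, the function must be defined in the script that mpiexec launches (a function defined in the job script is not visible inside a separate sh -c process by default), and an unset PLE_RANK_ON_NODE is treated as rank 0 so the function can be tried outside mpiexec.

```shell
#!/bin/bash
# Hypothetical helper: run the given command only in the process whose
# rank within the node (PLE_RANK_ON_NODE) is 0; other ranks do nothing.
# Assumption of this sketch: an unset PLE_RANK_ON_NODE counts as rank 0.
on_node_rank0() {
    if [ "${PLE_RANK_ON_NODE:-0}" -eq 0 ]; then
        "$@"
    fi
}

# Example use inside a script started by mpiexec (kept as a comment so
# that this sketch has no side effects when run as is):
# on_node_rank0 cp -rf ./data/ "${PJM_LOCALTMP}"
```

On ranks other than 0 the function returns success without running the command, so it is safe under error-checking shells.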

You can create a different output file for each parallel process in the node temporary area. The rank number of each process in a program executed by mpiexec is set in the environment variable PMIX_RANK. The following example uses the touch command:

- Create a file for each process.

mpiexec sh -c 'touch ${PJM_LOCALTMP}/result_${PMIX_RANK}.data'

- Save the output file of each parallel process from the node temporary area to the second-layer storage area.

mpiexec sh -c 'cp ${PJM_LOCALTMP}/result_${PMIX_RANK}.data ${PJM_JOBDIR}/result_${PMIX_RANK}_${PJM_JOBID}.data'

8.4.3. Job submitting option (pjsub --llio)

This section describes the options related to the node temporary area.

| pjsub option | Description |
|---|---|
| localtmp-size=size | Specifies the size of the node temporary area. The path name of the node temporary area is set in the environment variable PJM_LOCALTMP in a job. |
| async-close={on\|off} | Specifies whether files in the node temporary area are closed asynchronously, that is, whether the close from the cache in the compute node to the node temporary area is synchronous or asynchronous. on: asynchronous close. off: synchronous close (default value). |
| perf[,perf-path=path] | Outputs LLIO performance information to a file. By default, the output destination is under the current directory at job submission, and the file name is the one defined by the job ACL function. The perf-path parameter specifies the output file explicitly. |

8.4.5. Usage example of node temporary area

8.4.5.1. TMPDIR

Program performance can be improved by setting the environment variable TMPDIR to the node temporary area. A typical case is a Fortran program that uses scratch files. The /etc/profile provided by the system sets TMPDIR to the value of the environment variable HOME. Changing the program’s IO destination from the second-layer storage area to the faster node temporary area reduces scratch file access time and improves performance.

The following is an example of setting TMPDIR.

export TMPDIR=$PJM_LOCALTMP

Specify the size of the node temporary area to use, and submit the job.

#!/bin/bash
#PJM --llio localtmp-size=5Gi
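A job script that is also run outside a job, or submitted without localtmp-size, may benefit from a guard around the TMPDIR setting. The following is a minimal sketch. The function name use_localtmp_as_tmpdir is hypothetical, and the sketch assumes that PJM_LOCALTMP may be empty or may not point to an existing directory in those cases; that behavior is not stated in this section.

```shell
#!/bin/bash
# Hypothetical helper: point TMPDIR at the node temporary area only when
# PJM_LOCALTMP is set and names an existing directory; otherwise leave
# TMPDIR unchanged.
use_localtmp_as_tmpdir() {
    if [ -n "${PJM_LOCALTMP:-}" ] && [ -d "${PJM_LOCALTMP}" ]; then
        export TMPDIR="${PJM_LOCALTMP}"
    fi
}

use_localtmp_as_tmpdir
echo "TMPDIR=${TMPDIR:-<unset>}"
```

The echo line records the directory actually in use in the job's standard output, which helps when diagnosing unexpectedly slow scratch file access.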

8.4.5.2. Unzip of archive files

When unzipping an archive file into the node temporary area, performance can be improved by combining it with the common file distribution function (llio_transfer). Unzipping an archive file on second-layer storage from a large number of compute nodes concentrates access on that file and severely degrades IO performance. Distributing the archive file as a common file spreads the access and improves performance.

The following is an example of unzipping an archive file.

llio_transfer ./archive.tar
mpiexec sh -c 'if [ ${PLE_RANK_ON_NODE} -eq 0 ]; then \
    tar xf ./archive.tar -C $PJM_LOCALTMP; \
fi'
llio_transfer --purge ./archive.tar