2. System Configuration

2.1. Overview¶

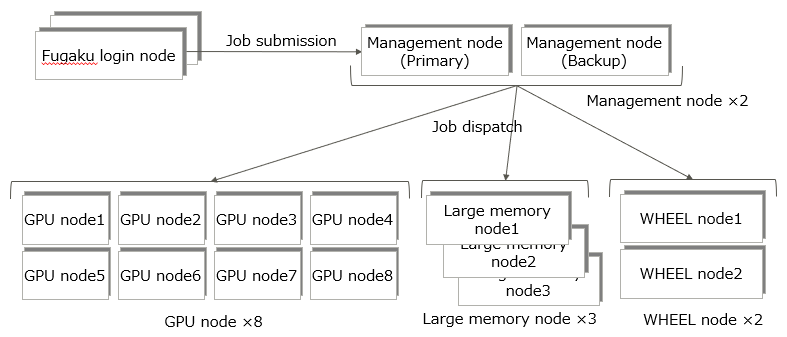

The pre/post environment consists of several nodes as shown in the figure below.

2.2. Compute Nodes

GPU node: x86_64-based 2-CPU node with 192GB memory

Large memory node: x86_64-based 4-CPU node with 6TB memory

WHEEL node: x86_64-based 2-CPU node with 256GB memory

| Node Type | Node Name |
|---|---|
| GPU node | pps01 |
| GPU node | pps02 |
| GPU node | pps03 |
| GPU node | pps04 |
| GPU node | pps05 |
| GPU node | pps06 |
| GPU node | pps07 |
| GPU node | pps08 |
| Large memory node | ppm01 |
| Large memory node | ppm02 |
| Large memory node | ppm03 |
| WHEEL node | wheel1 |
| WHEEL node | wheel2 |
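The node names above follow a prefix-plus-number pattern, so a script can infer the node type from the host name. The following is a minimal sketch under that assumption; the `NODE_TYPES` mapping and the `node_type` helper are illustrative names, not part of any site-provided tool, and the function checks only the prefix, not whether the number exists in the table.

```python
import re

# Hostname prefixes taken from the node table; the mapping itself is an
# illustrative assumption, not an official site API.
NODE_TYPES = {
    "pps": "GPU node",
    "ppm": "Large memory node",
    "wheel": "WHEEL node",
}


def node_type(hostname: str) -> str:
    """Return the node type for a compute-node name such as 'pps03'."""
    short = hostname.split(".")[0].lower()  # drop any domain suffix
    match = re.fullmatch(r"([a-z]+)(\d+)", short)
    if match is None or match.group(1) not in NODE_TYPES:
        raise ValueError(f"not a known compute node name: {hostname!r}")
    return NODE_TYPES[match.group(1)]


if __name__ == "__main__":
    for name in ("pps01", "ppm03", "wheel2"):
        print(name, "->", node_type(name))
```

Names outside the three compute-node prefixes, such as the management nodes, raise `ValueError` rather than being guessed.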

2.2.1. GPU Nodes

The hardware specifications of a GPU node are as follows.

| Item | Specification |
|---|---|
| Server | FUJITSU Server PRIMERGY RX2540 M5 |
| CPU | Intel Xeon Gold 6240 (2.60GHz/18core) x2 |
| Memory | 192GB (16GB 2933 RDIMM x12) |
| System disk | SAS 600GB/10krpm x2 (RAID1) |
| InfiniBand network | EDR (100Gbps) x1 |
| Ethernet network | 10Gbps (SFP+) x1, 1Gbps (RJ-45) x2 |
| GPU accelerator | NVIDIA Tesla V100 (32GB) x2 |
| OS | RHEL 8.10 (kernel 4.18.0-553) |

2.2.2. Large Memory Nodes

The hardware specifications of a large memory node are as follows.

Large memory nodes #1 and #2

| Item | Specification |
|---|---|
| Server | FUJITSU Server PRIMERGY RX4770 M5 |
| CPU | Intel Xeon Platinum 8280L (2.70GHz/28core) x4 |
| Memory | 6,144GB (128GB 2933 LRDIMM x48) |
| System disk | SAS 600GB/10krpm x2 (RAID1) |
| InfiniBand network | EDR (100Gbps) x1 |
| Ethernet network | 10Gbps (SFP+) x2, 1Gbps (RJ-45) x4 |
| OS | RHEL 8.10 (kernel 4.18.0-553) |

Large memory node #3

| Item | Specification |
|---|---|
| Server | FUJITSU Server PRIMERGY RX4770 M6 |
| CPU | Intel Xeon Platinum 8360HL (3.00GHz/24core) x4 |
| Memory | 6,144GB (128GB 3200 LRDIMM x48) |
| System disk | SAS 900GB/10krpm x2 (RAID1) |
| InfiniBand network | EDR (100Gbps) x1 |
| Ethernet network | 1Gbps (RJ-45) x4 |
| OS | RHEL 8.10 (kernel 4.18.0-553) |

2.2.3. WHEEL Nodes

The hardware specifications of a WHEEL node are as follows.

| Item | Specification |
|---|---|
| Server | FUJITSU Server PRIMERGY RX2540 M6 |
| CPU | Intel Xeon Gold 6338 (2.00GHz/32core) x2 |
| Memory | 256GB (16GB 3200 RDIMM x16) |
| System disk | SAS 900GB/10krpm x2 (RAID1) |
| InfiniBand network | EDR (100Gbps) x1 |
| Ethernet network | 10Gbps (SFP+) x1, 1Gbps (RJ-45) x4 |
| OS | RHEL 8.10 (kernel 4.18.0-553) |
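The per-node totals implied by the hardware tables in Section 2.2 (CPU sockets times cores per socket, DIMM size times DIMM count) can be cross-checked with a short calculation. This is only a sketch: the `NodeSpec` class and `SPECS` dictionary are illustrative names, and the core counts are the physical cores listed in the tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    sockets: int           # number of CPUs in the node
    cores_per_socket: int  # physical cores per CPU
    dimm_gb: int           # capacity of one DIMM in GB
    dimm_count: int        # number of DIMMs installed

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def memory_gb(self) -> int:
        return self.dimm_gb * self.dimm_count


# Values copied from the hardware specification tables in Section 2.2.
SPECS = {
    "GPU node": NodeSpec(2, 18, 16, 12),
    "Large memory node #1, #2": NodeSpec(4, 28, 128, 48),
    "Large memory node #3": NodeSpec(4, 24, 128, 48),
    "WHEEL node": NodeSpec(2, 32, 16, 16),
}

for name, spec in SPECS.items():
    print(f"{name}: {spec.cores} cores, {spec.memory_gb} GB")
```

The computed memory sizes (192 GB, 6,144 GB, and 256 GB) match the totals stated in the tables, and the core counts come to 36, 112, 96, and 64 per node respectively.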

2.3. Management Nodes

| Node Type | Node Name |
|---|---|
| Management node #1 (primary) | ppctl1 |
| Management node #2 (secondary) | ppctl2 |

2.4. Software

The main software components used in the pre/post environment are as follows.

| Item | Software |
|---|---|
| OS | RHEL 8.10 (kernel 4.18.0-553) |
| Slurm software | Slurm version 24.05.3 |
| DB software (Slurm) | MariaDB Server 10.3 |
| Fugaku operation software (some functions are used) | FUJITSU Software Technical Computing Suite V4.0L20A |
| CUDA Toolkit | 12.8 |

2.4.1. Compilers

GCC, the Intel compiler, the NVIDIA CUDA Compiler [1], and the Fujitsu compiler [2] are available in the pre/post environment.

| Name | Language | Version | Command (examples) |
|---|---|---|---|
| GCC (provided as standard with RHEL 8.10) | Fortran, C, C++ | 8.5.0 20210514 (Red Hat 8.5.0-26) | gfortran, gcc, g++ |
| Intel oneAPI Base & HPC Toolkit | Fortran, C, C++ | Refer to the FAQ: “Compiler on login node” | ifort, icc, icpc |
| NVIDIA CUDA Compiler | C/C++ | Build cuda_12.8.r12.8/compiler.35404655_0 | nvcc |
| Fujitsu compiler (cross compiler) | Fortran, C, C++ | Refer to the manual: “Supercomputer Fugaku Users Guide - Language and development environment -” | frtpx, fccpx, FCCpx |
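To see which of the compiler commands listed above are reachable in the current shell, a script can look each one up on `PATH`. The sketch below uses only the Python standard library; the `COMPILER_COMMANDS` dictionary and `find_compilers` function are illustrative names. Whether a given command is found depends on how the environment has been set up on the node, which this section does not describe.

```python
import shutil

# Command names copied from the compiler table; the grouping keys are
# illustrative labels only.
COMPILER_COMMANDS = {
    "GCC": ["gfortran", "gcc", "g++"],
    "Intel oneAPI": ["ifort", "icc", "icpc"],
    "NVIDIA CUDA Compiler": ["nvcc"],
    "Fujitsu compiler (cross)": ["frtpx", "fccpx", "FCCpx"],
}


def find_compilers(commands=COMPILER_COMMANDS):
    """Map each command name to its resolved path, or None if not on PATH."""
    return {
        cmd: shutil.which(cmd)
        for group in commands.values()
        for cmd in group
    }


if __name__ == "__main__":
    for cmd, path in find_compilers().items():
        print(f"{cmd:10s} {path or 'not found on PATH'}")
```

A `None` result means only that the command is not on the current `PATH`, not that the compiler is absent from the system.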